From an SEO standpoint, configuring the Magento 2 robots.txt file is an important step for your website. A robots.txt file tells web crawlers which pages and files on your site they may crawl, which makes it a key part of technical SEO.

SEO is about sending the right signals to search engines, and the Magento 2 robots.txt file is one of the most direct ways to communicate your crawling preferences to them.

What is Robots.txt?

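Robots.txt files tell search engine crawlers which URLs on your site they can access. Their main purpose is to keep crawlers from overloading your site with requests. Robots.txt files are intended to manage the activity of well-behaved bots, since bad bots are unlikely to follow the instructions. Robots.txt is not a reliable way to keep a page out of search results: a blocked URL can still be indexed if other pages link to it. To keep a web page out of search results, use a noindex directive (and leave the page crawlable so search engines can see that directive) or password-protect the page or website.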
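To make the crawler side of this concrete, here is a minimal sketch of how a well-behaved bot could consult robots.txt before requesting a URL, using Python's standard-library `urllib.robotparser`. The rules, the bot name `ExampleBot`, and the `example.com` URLs are hypothetical placeholders, not Magento defaults.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration; a real crawler would
# download it from the store's /robots.txt URL instead.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if a polite crawler is allowed to request `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse() also marks the rules as loaded
    return parser.can_fetch(user_agent, url)


print(may_crawl(ROBOTS_TXT, "ExampleBot", "https://example.com/checkout/cart/"))
print(may_crawl(ROBOTS_TXT, "ExampleBot", "https://example.com/women.html"))
```

Nothing forces a bot to run this check, which is why robots.txt only restrains crawlers that choose to respect it.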

Why is Robots.txt Important?

In addition to helping you divert search engine crawlers away from the less important pages on your site, robots.txt for Magento 2 can also provide the following benefits.

- It can help keep duplicate content out of search results. Sometimes your website needs more than one copy of a piece of content; for example, a printable version of a page gives you two versions of the same content. Google doesn't penalize ordinary duplicate content, but duplicates can split ranking signals between URLs and waste crawl budget. Blocking the extra copy helps search engines focus on the original.

- Using the Magento 2 robots.txt file, you can keep search engine crawlers away from pages that are still in development until they are ready.

- There may also be pages on your website that aren't meant for the public. For example, you might have added a simple product page that isn't meant to be viewed on its own, or a thank-you page that appears after someone makes a purchase or registers. Since these pages don't belong in search results, there is no point in having Google or other search engines crawl them.

How to Configure Robots.txt for Magento 2

Follow the steps below to configure your robots.txt file in Magento 2.

Step 1: Log in to your Admin Panel.

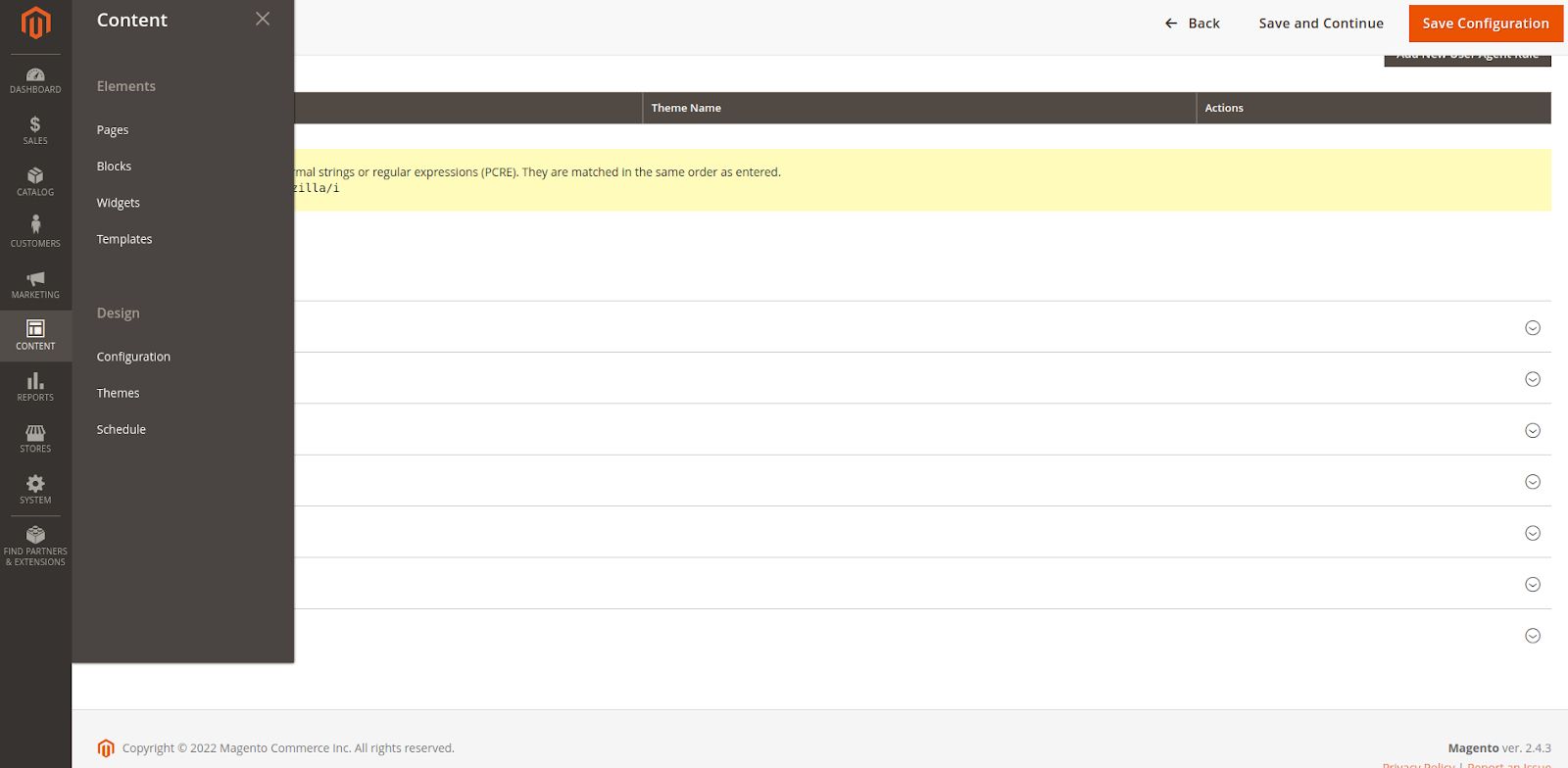

Step 2: Go to Content > Design > Configuration.

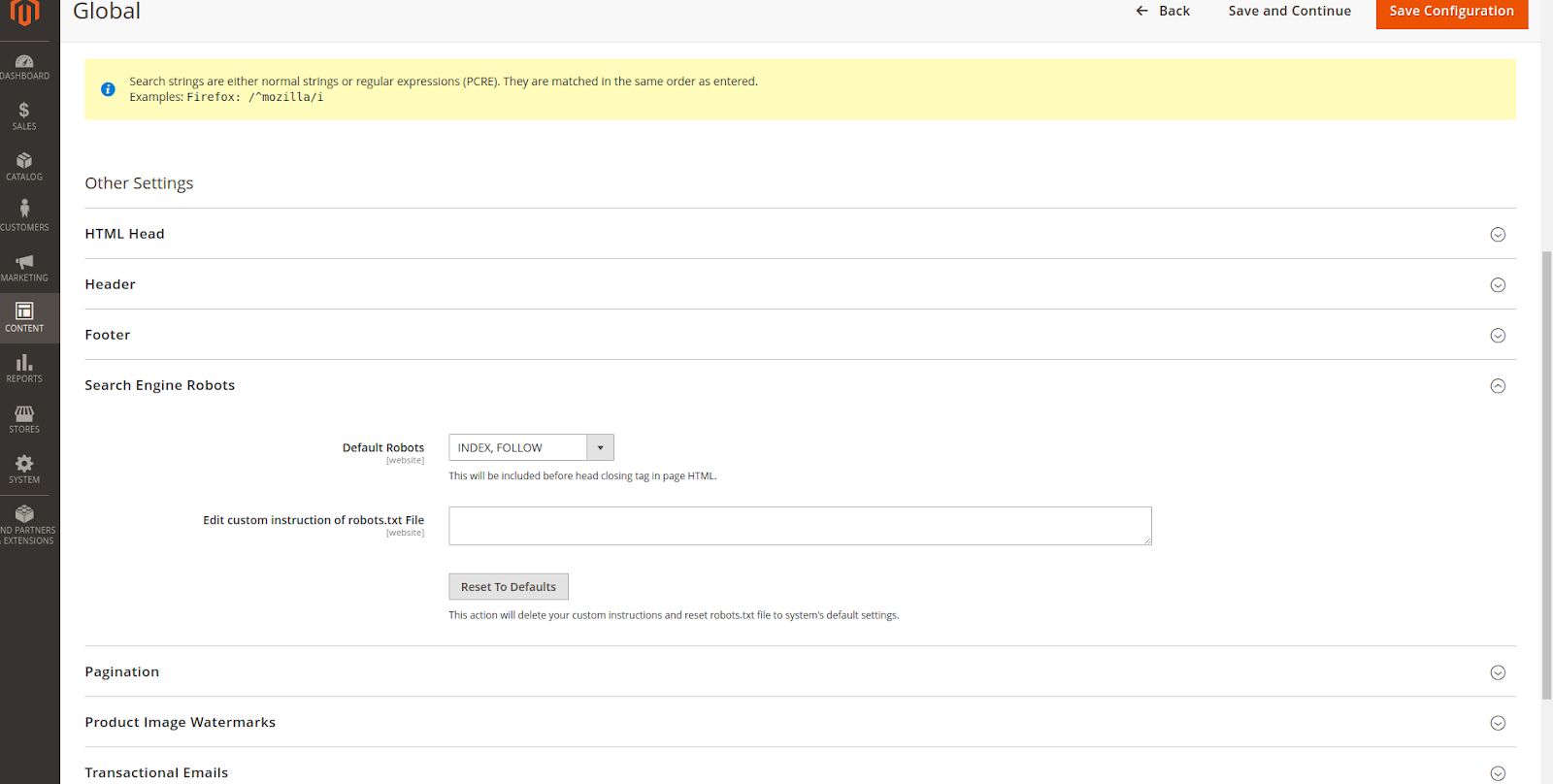

Step 3: In the grid that opens, find the Global row, click the Edit link, and open the Search Engine Robots tab.

Step 4: Choose a Default Robots value. This setting controls the robots meta tag that Magento adds to your store's pages:

- INDEX, FOLLOW: Search engines may index your pages and follow the links on them.

- NOINDEX, FOLLOW: Search engines won't index your pages but will still follow the links on them.

- INDEX, NOFOLLOW: Search engines may index your pages but won't follow the links on them.

- NOINDEX, NOFOLLOW: Search engines won't index your pages or follow their links, which effectively hides your store from search results.
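The Default Robots value ends up in each page's HTML as a robots meta tag, for example `<meta name="robots" content="NOINDEX,FOLLOW"/>`. The sketch below shows how a crawler could read such a value into two independent flags. The function name `parse_meta_robots` is hypothetical, and real crawlers recognize more directives than the two handled here.

```python
def parse_meta_robots(content: str) -> dict:
    """Interpret a robots meta value such as "NOINDEX,FOLLOW".

    Only the index/noindex and follow/nofollow directives are handled;
    both default to allowed when they are absent.
    """
    directives = {d.strip().lower() for d in content.split(",") if d.strip()}
    return {
        "index": "noindex" not in directives,    # may the page appear in results?
        "follow": "nofollow" not in directives,  # may crawlers follow its links?
    }


# The four Default Robots options offered in the Magento admin.
for value in ("INDEX,FOLLOW", "NOINDEX,FOLLOW", "INDEX,NOFOLLOW", "NOINDEX,NOFOLLOW"):
    print(value, parse_meta_robots(value))
```

Treating the two words as separate flags reflects how the options combine: indexing decides whether the page itself can appear in results, while following decides whether its links are used to discover other pages.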

Step 5: Use the Edit custom instruction of robots.txt File field to write your own instructions. To restore the default settings, click the Reset To Default button; note that this removes all of your custom instructions.

Step 6: Click on Save Configuration to apply the changes.

Magento 2 Robots.txt Custom Instructions Examples

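As mentioned above, you can add custom instructions to the Magento 2 robots.txt configuration. Here are some examples. Every Disallow rule must belong to a group that starts with a User-agent line, so place the rule sets below that don't include one under User-agent: * or under the name of a specific crawler.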

Allow Full Access

User-agent: *
Disallow:

Disallow Access to All Folders

User-agent: *
Disallow: /
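Both examples can be checked with Python's `urllib.robotparser`, as in this minimal sketch. The bot name `AnyBot` and the store URL are placeholders. The third case shows why the User-agent line should sit directly above its rules: Python's parser, like the original robots.txt convention, treats a blank line as the end of a group, so a rule separated from its User-agent line by a blank line is dropped.

```python
from urllib.robotparser import RobotFileParser

ALLOW_ALL = "User-agent: *\nDisallow:\n"
BLOCK_ALL = "User-agent: *\nDisallow: /\n"
# Same rule as BLOCK_ALL, but with a blank line between the two lines.
BLOCK_ALL_WITH_GAP = "User-agent: *\n\nDisallow: /\n"


def can_fetch(robots_txt: str, url: str, agent: str = "AnyBot") -> bool:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


url = "https://example.com/gear/bags.html"  # hypothetical store URL
print(can_fetch(ALLOW_ALL, url))           # an empty Disallow blocks nothing
print(can_fetch(BLOCK_ALL, url))           # "/" is a prefix of every path
print(can_fetch(BLOCK_ALL_WITH_GAP, url))  # Python's parser drops the orphaned rule
```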

Block Googlebot From a Folder

User-agent: Googlebot
Disallow: /subfolder/

Block Googlebot From a Page

User-agent: Googlebot
Disallow: /subfolder/page-url.html
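The difference between the folder rule and the page rule comes down to prefix matching, and a group named after Googlebot doesn't apply to other crawlers. A minimal sketch with `urllib.robotparser`, where `/subfolder/` and the page names are the placeholders from the examples and `Bingbot` simply stands in for any other crawler:

```python
from urllib.robotparser import RobotFileParser

FOLDER_RULE = "User-agent: Googlebot\nDisallow: /subfolder/\n"
PAGE_RULE = "User-agent: Googlebot\nDisallow: /subfolder/page-url.html\n"


def allowed(rules: str, agent: str, path: str) -> bool:
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(agent, "https://example.com" + path)


# The folder rule blocks every path that starts with /subfolder/ ...
print(allowed(FOLDER_RULE, "Googlebot", "/subfolder/any-page.html"))
# ... but only for Googlebot; other crawlers have no matching group.
print(allowed(FOLDER_RULE, "Bingbot", "/subfolder/any-page.html"))
# The page rule blocks that one page and leaves its neighbors crawlable.
print(allowed(PAGE_RULE, "Googlebot", "/subfolder/page-url.html"))
print(allowed(PAGE_RULE, "Googlebot", "/subfolder/other-page.html"))
```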

Default Instructions

Disallow: /lib/
Disallow: /pkginfo/
Disallow: /report/
Disallow: /var/
Disallow: /catalog/
Disallow: /customer/
Disallow: /review/
Disallow: /*.php$
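The `/*.php$` rule uses two wildcards defined in RFC 9309 and supported by major crawlers such as Googlebot: `*` matches any sequence of characters and a trailing `$` anchors the rule to the end of the URL. Python's `urllib.robotparser` only compares plain prefixes, so the minimal sketch below translates a rule into a regular expression instead. The helper names are hypothetical, and it ignores details such as percent-encoding normalization.

```python
import re


def rule_to_regex(rule_path: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regex.

    '*' matches any run of characters and a trailing '$' anchors the
    match to the end of the path; everything else is matched literally.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    body = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(rule_path: str, url_path: str) -> bool:
    # re.match anchors at the start, which gives robots.txt prefix semantics.
    return rule_to_regex(rule_path).match(url_path) is not None


print(matches("/*.php$", "/index.php"))                    # ends in .php
print(matches("/*.php$", "/index.php/customer/account/"))  # .php is not at the end
print(matches("/catalog/", "/catalogsearch/result/"))      # different prefix
```

The last check explains why catalog search pages need their own /catalogsearch/ rule: /catalog/ is not a prefix of /catalogsearch/.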

Restrict Checkout Pages and User Accounts

Disallow: /checkout/
Disallow: /onestepcheckout/
Disallow: /customer/
Disallow: /customer/account/
Disallow: /customer/account/login/
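Because Disallow rules are prefix matches, `/customer/` already covers `/customer/account/` and `/customer/account/login/`; the longer lines are harmless but redundant. A small hypothetical helper that flags such rules, assuming plain prefixes only (rules with `*` or `$` are skipped):

```python
def redundant_rules(paths: list[str]) -> list[str]:
    """Return Disallow paths already covered by a shorter prefix in the list.

    Only plain prefixes are compared; rules containing '*' or '$'
    are left out of the comparison.
    """
    plain = [p for p in paths if "*" not in p and "$" not in p]
    return [
        p for p in plain
        if any(other != p and p.startswith(other) for other in plain)
    ]


checkout_rules = [
    "/checkout/",
    "/onestepcheckout/",
    "/customer/",
    "/customer/account/",
    "/customer/account/login/",
]
print(redundant_rules(checkout_rules))
```

Keeping the redundant lines does no harm; the helper is just a way to keep a long custom-instruction list easier to review.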

Restrict Catalog Search Pages

Disallow: /catalogsearch/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/

Disallow Duplicate Content

Disallow: /tag/
Disallow: /review/
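The rule sets above overlap (for example, /customer/ and /review/ each appear twice), so if you paste several of them into the custom-instruction field it can help to merge them under one User-agent line. A minimal sketch of that approach; the function `build_robots_txt` is hypothetical, and the check at the end only covers plain prefixes because Python's `urllib.robotparser` doesn't interpret the `/*.php$` wildcard.

```python
from urllib.robotparser import RobotFileParser

# Rule sets from this article; which ones you combine is up to you.
RULE_SETS = {
    "default": ["/lib/", "/pkginfo/", "/report/", "/var/", "/catalog/",
                "/customer/", "/review/", "/*.php$"],
    "checkout": ["/checkout/", "/onestepcheckout/", "/customer/",
                 "/customer/account/", "/customer/account/login/"],
    "catalog_search": ["/catalogsearch/", "/catalog/product_compare/",
                       "/catalog/category/view/", "/catalog/product/view/"],
    "duplicates": ["/tag/", "/review/"],
}


def build_robots_txt(rule_sets: dict, user_agent: str = "*") -> str:
    """Merge rule sets into one group, keeping first occurrences in order."""
    seen = []
    for paths in rule_sets.values():
        for path in paths:
            if path not in seen:
                seen.append(path)
    lines = [f"User-agent: {user_agent}"] + [f"Disallow: {p}" for p in seen]
    return "\n".join(lines) + "\n"


robots_txt = build_robots_txt(RULE_SETS)
print(robots_txt)

# Sanity check on plain-prefix rules (hypothetical store URLs).
parser = RobotFileParser()
parser.parse(robots_txt.splitlines())
print(parser.can_fetch("AnyBot", "https://example.com/catalogsearch/result/?q=bag"))
print(parser.can_fetch("AnyBot", "https://example.com/gear/bags.html"))
```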

How to Add Sitemap to Robots.txt file in Magento 2

A sitemap is an XML file that lists the webpages on your site along with metadata about each URL (for example, when it was last modified). Whereas robots.txt tells crawlers what to avoid, a sitemap gives search engines a single place to find all the pages you want crawled.
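To show what such a file contains, here is a minimal sketch that builds a sitemap in the sitemaps.org format with Python's `xml.etree.ElementTree`. Magento generates its own sitemap, so this is for illustration only; the function name, URLs, and dates are placeholders.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc  # ElementTree escapes & and <
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod  # W3C date, e.g. YYYY-MM-DD
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/gear/bags.html", None),
])
print(sitemap_xml)
```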

To have Magento 2 add your sitemap to robots.txt automatically, enable Submission to Robots.txt:

Step 1: Log in to your Admin Panel.

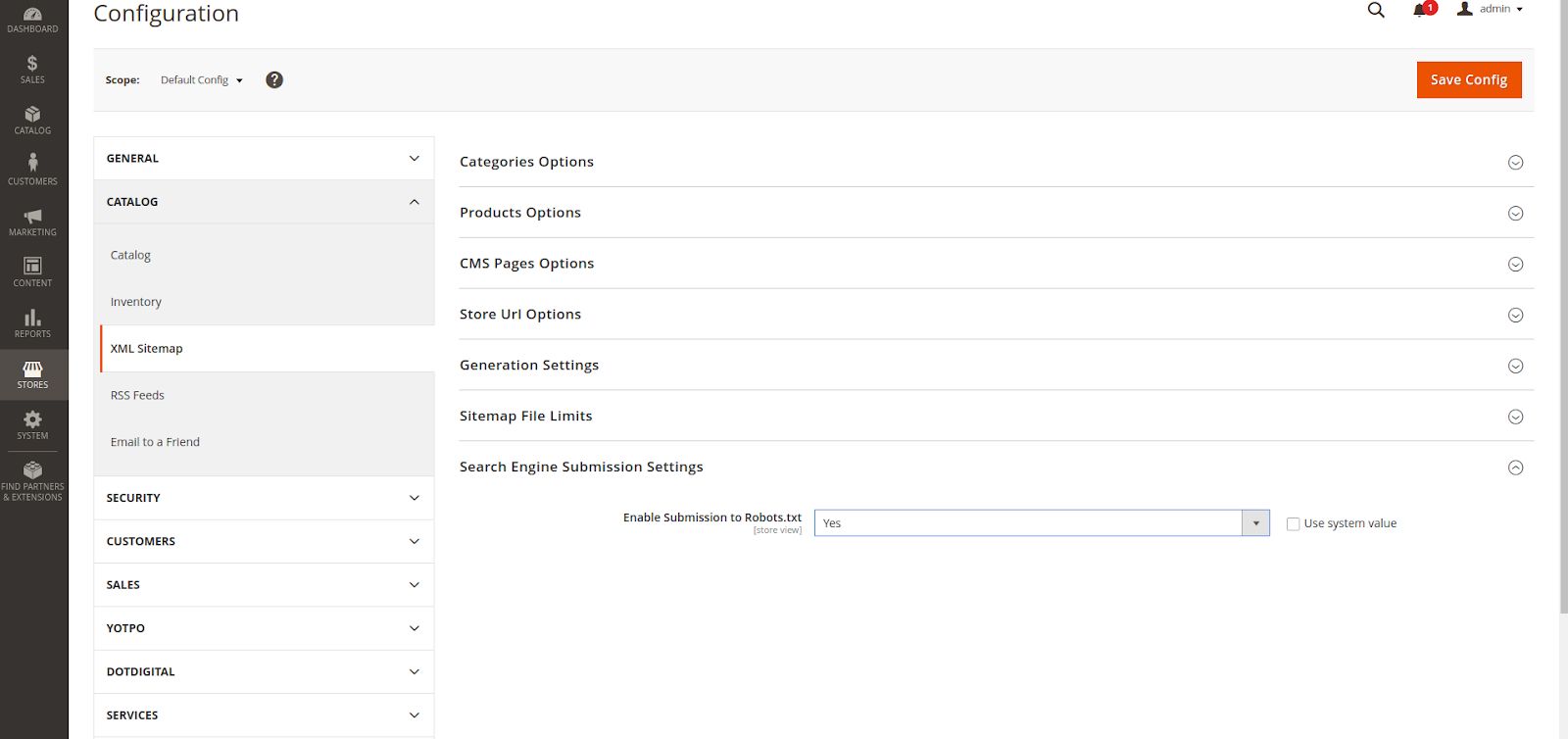

Step 2: Go to Stores > Settings > Configuration and choose XML Sitemap in the Catalog tab.

Step 3: Open the Search Engine Submission Settings tab and set Enable Submission to Robots.txt to Yes.

Step 4: Click the Save Config button.

Alternatively, you can add the sitemap manually. Navigate to Content > Design > Configuration > Search Engine Robots and add Sitemap lines to the Edit custom instruction of robots.txt File field, for example (replace the URLs with your own sitemap locations):

Sitemap: https://magefan.com/pub/sitemaps/sitemap.xml
Sitemap: https://magefan.com/pub/sitemaps/blog_sitemap.xml
Sitemap: https://magefan.com/pub/sitemaps/sitemap_ua.xml
Sitemap: https://magefan.com/pub/sitemaps/blog_sitemap_ua.xml
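Search engines expect each Sitemap line to contain a fully qualified URL. A minimal sketch that reads the Sitemap lines back with `urllib.robotparser` (its `site_maps()` method requires Python 3.8 or later) and flags any that aren't absolute http(s) URLs. The helper names are hypothetical, and the sitemap URLs are the example ones from above.

```python
from urllib.parse import urlparse
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Sitemap: https://magefan.com/pub/sitemaps/sitemap.xml
Sitemap: https://magefan.com/pub/sitemaps/blog_sitemap.xml
Sitemap: https://magefan.com/pub/sitemaps/sitemap_ua.xml
Sitemap: https://magefan.com/pub/sitemaps/blog_sitemap_ua.xml
"""


def sitemap_urls(robots_txt: str) -> list:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when there are none


def non_absolute(urls: list) -> list:
    """Return the sitemap URLs that lack an http or https scheme."""
    return [u for u in urls if urlparse(u).scheme not in ("http", "https")]


urls = sitemap_urls(ROBOTS_TXT)
print(urls)
print(non_absolute(urls))
print(non_absolute(sitemap_urls("Sitemap: /pub/sitemaps/sitemap.xml\n")))
```

Sitemap lines aren't tied to a User-agent group, so they can appear anywhere in the file.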

Listing your current sitemap in robots.txt helps search engines find new and updated pages sooner, instead of discovering them days later by crawling the whole site. Pages that aren't indexed correctly can't rank well, which hurts your position in search results. Site performance matters too, so keep your store optimized; our Magento 2 speed optimization article can help further.

Now You Know

Now you know how to configure the Magento 2 robots.txt file for your website.

If you’re on Magento, consider fully managed Magento hosting from Nexcess. Our plans include Varnish, PHP 7+, an integrated CDN, and image compression for top-tier performance.

Take advantage of our free Magento site migrations and switch to Nexcess today.

Explore our fully managed Magento plans to get started.